Google announced in early 2005 that hyperlinks with the rel="nofollow" attribute would not influence the link target’s PageRank. The Yahoo and Windows Live search engines also respect this attribute.

Search engines interpret the attribute differently. Some take it literally and do not follow the link to the target page; others still “follow” the link to discover new web pages for indexing. In the latter case, rel="nofollow" actually tells a search engine “Don’t score this link” rather than “Don’t follow this link.” This differs from the meaning of nofollow in a robots meta tag, which tells a search engine: “Do not follow any of the hyperlinks in the body of this document.”
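To make that distinction concrete, here is a minimal Python sketch (standard library only) that scans a page for both signals: the page-level robots meta tag and the link-level rel attribute. The function name `audit_nofollow` and the sample markup are made up for illustration; this is one way to check your own pages, not a description of how any search engine parses them.

```python
from html.parser import HTMLParser


class _NofollowParser(HTMLParser):
    """Collects page-level and link-level nofollow hints."""

    def __init__(self):
        super().__init__()
        self.page_nofollow = False  # True if a robots meta tag lists nofollow
        self.links = []             # (href, has_rel_nofollow) per <a href=...>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            # content is a comma-separated list such as "noindex, nofollow"
            content = (attrs.get("content") or "").lower()
            directives = [d.strip() for d in content.split(",")]
            if "nofollow" in directives:
                self.page_nofollow = True
        elif tag == "a" and attrs.get("href"):
            # rel is a space-separated list of tokens
            rel_tokens = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel_tokens))


def audit_nofollow(html):
    """Return (page_nofollow, links) for an HTML string (hypothetical helper)."""
    parser = _NofollowParser()
    parser.feed(html)
    return parser.page_nofollow, parser.links


# Sample markup invented for this example.
sample = """
<a href="/products/widget">Widget</a>
<a href="/privacy-policy" rel="nofollow">Privacy Policy</a>
"""
page_flag, links = audit_nofollow(sample)
print(page_flag)  # False: no robots meta tag on this page
print(links)      # [('/products/widget', False), ('/privacy-policy', True)]
```

A page whose head contains `<meta name="robots" content="noindex, nofollow">` would come back with the page-level flag set to True, regardless of what the individual links say.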

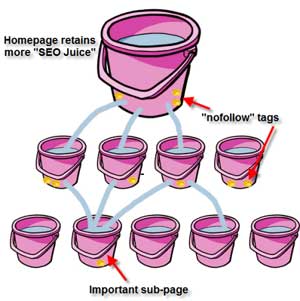

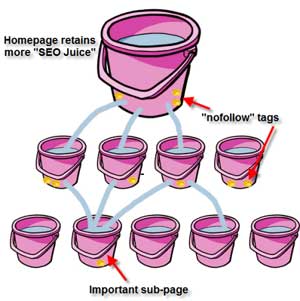

Effective use of the rel="nofollow" attribute can improve the Google PageRank of selected pages by preventing the leakage of ‘link juice’ to pages that are non-essential for site PageRank purposes, such as Terms and Conditions or Privacy Policy pages. These pages do not need to be indexed by search engines, but if “nofollow” is not used, the links to them can dilute the PageRank available to your essential pages, such as product descriptions.

Learning to implement “nofollow” tags is fairly easy. Learning how to apply them in the proper way does require some skill.

The use of “nofollow” tags can serve many different purposes. They can be used to limit the amount of ‘link juice’ that flows out of a page to external pages of different domains, or they can be used to control where the ‘link juice’ will flow to within a site and its internal pages.

This post is about the use of “nofollow” tags to control the amount of link juice flowing within a site and its internal pages. To better explain this I came up with an illustration that should help the “not so technical” crowd understand this process.

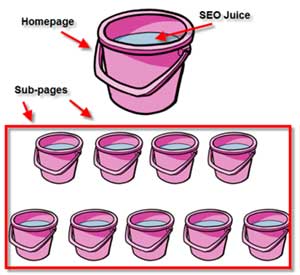

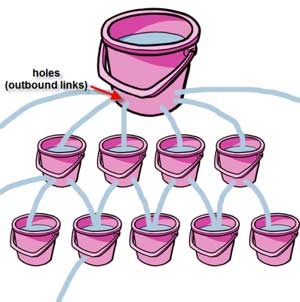

If you would, please visualize your homepage as a bucket, and the subpages as sub-buckets. See the image below:

Your total search engine authority can be represented by what I’m going to call “SEO Juice”.

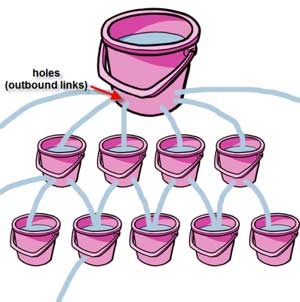

Now, imagine that every link you have, in every page of your site, is a hole in the bucket. Once the different search engines pour their “SEO Juice” into your homepage bucket, the juice leaks out to your sub-pages, and external pages, through every link you have.

The problem is that some of your sub-buckets (sub-pages) don’t need that “SEO Juice” as much, while others need a lot of it. A good example is those “Privacy Policy” and “Shipping Info” types of pages that really don’t need to rank highly in any SERP. So, instead of spreading your “SEO Juice” thin, you’d direct it to where it is most needed. If your site has an extremely relevant, high-converting sub-page that you want to boost, that would be a good place to start.
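If you like numbers better than buckets, here is a toy Python calculation of the same idea. It assumes a page splits its juice evenly among the links that are not marked “nofollow”, which is the simplified picture this post uses. The function name `split_juice` and the page paths are invented for the example, and search engines do not publish how they actually weight links, so treat this as an illustration of the analogy and nothing more.

```python
def split_juice(page_juice, links):
    """Split page_juice evenly among links that are not marked nofollow.

    links maps each target page to True (nofollow) or False (followed).
    This is a toy model of the bucket analogy, not a real ranking formula.
    """
    open_links = [target for target, nofollow in links.items() if not nofollow]
    if not open_links:
        return {}
    share = page_juice / len(open_links)
    return {target: share for target in open_links}


# Hypothetical homepage with four internal links, none plugged.
unplugged = {
    "/products/widget": False,
    "/products/gadget": False,
    "/privacy-policy": False,
    "/shipping-info": False,
}
print(split_juice(1.0, unplugged))
# {'/products/widget': 0.25, '/products/gadget': 0.25,
#  '/privacy-policy': 0.25, '/shipping-info': 0.25}

# Same homepage with the two low-priority links plugged.
plugged = dict(unplugged)
plugged["/privacy-policy"] = True
plugged["/shipping-info"] = True
print(split_juice(1.0, plugged))
# {'/products/widget': 0.5, '/products/gadget': 0.5}
```

In this toy model, plugging two of the four holes doubles the share that reaches each product page.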

The “nofollow” tags help you plug the holes of different buckets and let most of the juice flow where you want more search engine authority. See the image below:

Once you’ve drawn the “nofollow” strategy map for your site and decided which pages need more search engine authority, the implementation part is quite simple.
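As a rough idea of what that implementation can look like, here is a short Python sketch that adds rel="nofollow" to links pointing at the pages you chose to plug. The helper `render_link` and the paths in `NOFOLLOW_PATHS` are hypothetical; your own list comes from your strategy map, and if your site runs on a CMS or template engine you would do the equivalent there.

```python
from html import escape

# Hypothetical low-priority pages; replace with the ones from your own map.
NOFOLLOW_PATHS = {"/privacy-policy", "/terms-and-conditions", "/shipping-info"}


def render_link(href, text, nofollow_paths=NOFOLLOW_PATHS):
    """Build an <a> tag, adding rel="nofollow" when href is in nofollow_paths."""
    rel = ' rel="nofollow"' if href in nofollow_paths else ""
    return f'<a href="{escape(href, quote=True)}"{rel}>{escape(text)}</a>'


print(render_link("/privacy-policy", "Privacy Policy"))
# <a href="/privacy-policy" rel="nofollow">Privacy Policy</a>

print(render_link("/products/widget", "Widget"))
# <a href="/products/widget">Widget</a>
```

The only change to the markup is the extra rel="nofollow" on the anchor; the link still works exactly the same for your visitors.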

Now that you understand what “nofollow” tags can do for your site, make sure you look into taking advantage of this awesome tool, and take control of where your “SEO Juice” is flowing!

Still confused? Let us help you out! Check out our full list of search engine optimization services.
